It was just another school day at Hingham High School in Massachusetts until an AI detection tool flipped the script for several students. Suddenly, they found themselves accused of cheating on their assignments using AI writing assistants. What followed was not detention or a meeting with the principal, but a lawsuit that’s sending ripples through educational institutions across America.

I’ve been watching this case unfold with a mix of fascination and concern. As someone who writes about educational technology, I can’t help but wonder: Are we ready for the brave new world where algorithms judge student work? And what happens when these digital detectives get it wrong?

The Hingham High School lawsuit isn’t just about a few students fighting back against accusations. It’s a landmark case that could reshape how schools approach artificial intelligence, student privacy, and academic integrity policies nationwide.

Let’s dive into what happened, why it matters, and what it means for students, parents, and educators navigating education in the age of AI.

Background of the Hingham High School AI Lawsuit

Picture this: A high school English teacher becomes suspicious about the quality of some student papers. The papers seem too polished, too advanced. Instead of just having a conversation with the students, the teacher turns to an AI detection tool to confirm these suspicions.

The result? Several students at Hingham High School in Massachusetts were accused of using AI writing tools to complete their assignments – a violation of academic integrity according to the school’s policies. But here’s where things get interesting: these students insist they wrote their papers themselves, the old-fashioned way.

In late 2023, one student and their parents decided to fight back. They filed a lawsuit against the school district, arguing that the AI detection software used by the teacher was unreliable and had falsely accused the student of cheating. According to NBC News, the lawsuit claimed that the student faced academic penalties based solely on the software’s determination, without additional evidence of misconduct.

The plaintiff alleged that the school violated the student’s due process rights and failed to follow its own disciplinary procedures. What makes this case particularly noteworthy is that it’s one of the first legal challenges to address the use of AI detection tools in educational settings.

The school district initially defended its actions, stating that its policies clearly prohibited the use of AI tools for assignments unless explicitly authorized by teachers. However, the district has since been forced to reconsider its approach as the lawsuit has progressed.

As this legal battle unfolds in Massachusetts, schools nationwide are watching closely. The outcome could set important precedents for how educational institutions implement and enforce policies around AI use and detection.

Understanding AI Detection Tools in Education

So how exactly do these AI detectors work? I’ll break it down in simple terms.

AI detection tools analyze text to identify patterns and characteristics that might indicate machine-generated content. They look for things like unusual consistency in writing style, predictable sentence structures, or language patterns that match known AI writing models like ChatGPT or Claude.

Think of them as the digital equivalent of a teacher who’s read thousands of student essays and can spot when something doesn’t sound like a teenager wrote it. Except these tools use algorithms and statistical models instead of years of teaching experience.
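To make the idea of “pattern spotting” concrete, here is a deliberately simplified sketch in Python. It measures just one signal, how much sentence length varies, and it is an illustration only: the function names are made up for this example, and nothing here reflects how Turnitin, GPTZero, or any other real detector actually works.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Split text on ., !, or ? and return the word count of each sentence."""
    sentences = [s.strip() for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def length_variation(text: str) -> float:
    """Coefficient of variation of sentence length (std deviation / mean).

    Lower values mean more uniform sentences. This is a toy signal,
    not a real detection method.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


# Hypothetical sample texts, written by hand for this example.
uniform = "The cat sat on the mat. The dog lay on the rug. The bird sat on the branch."
varied = (
    "I ran. The storm that had been building all afternoon finally "
    "broke over the harbor. We waited."
)

print("uniform sample:", round(length_variation(uniform), 2))
print("varied sample: ", round(length_variation(varied), 2))
```

Notice that the first sample, with its evenly sized sentences, scores as perfectly uniform even though a person could easily have written it. A careful human writer can look “machine-like” on a crude measure like this one.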

Some popular AI detection tools used in educational settings include:

- Turnitin’s AI writing detection feature

- GPTZero

- ZeroGPT

- Copyleaks AI Content Detector

- Originality.ai

Here’s the problem, though: none of these tools is perfect. Far from it, actually. Some studies have reported false positive rates ranging from 10% to as high as 40%, depending on the tool and the type of content being analyzed. This means a detector might flag genuinely human-written text as AI-generated, which is exactly what the Hingham High School students claim happened to them.
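To get a feel for what those percentages mean in a real classroom, here is a quick back-of-the-envelope calculation in Python. The class size of 100 is a hypothetical I picked for round numbers, and the function name is just for this example; the 10% and 40% rates are the ends of the range mentioned above.

```python
def expected_false_flags(honest_essays: int, false_positive_rate: float) -> float:
    """Expected number of human-written essays wrongly flagged as AI-generated."""
    if not 0.0 <= false_positive_rate <= 1.0:
        raise ValueError("false_positive_rate must be between 0 and 1")
    return honest_essays * false_positive_rate


# Hypothetical scenario: 100 essays, every one written by the student.
for rate in (0.10, 0.40):
    flagged = expected_false_flags(100, rate)
    print(f"At a {rate:.0%} false positive rate: about {flagged:.0f} of 100 honest essays flagged")
```

At the low end, that is roughly 10 honest students out of 100 facing an accusation; at the high end, 40.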

Several factors can trigger false positives:

- Students with highly developed writing skills

- Non-native English speakers who use more formal language

- Writing that follows rigid academic structures

- Content on common topics that might resemble training data

Another issue is that these tools often provide confidence scores (“85% likely to be AI-generated”) without explaining which specific parts of the text triggered the alert. This lack of transparency makes it difficult for students to defend their work.

The Hingham case has raised important questions about whether these tools are reliable enough to base academic penalties on – especially when those penalties can affect students’ academic records and future opportunities.

The Legal Arguments in the Hingham Case

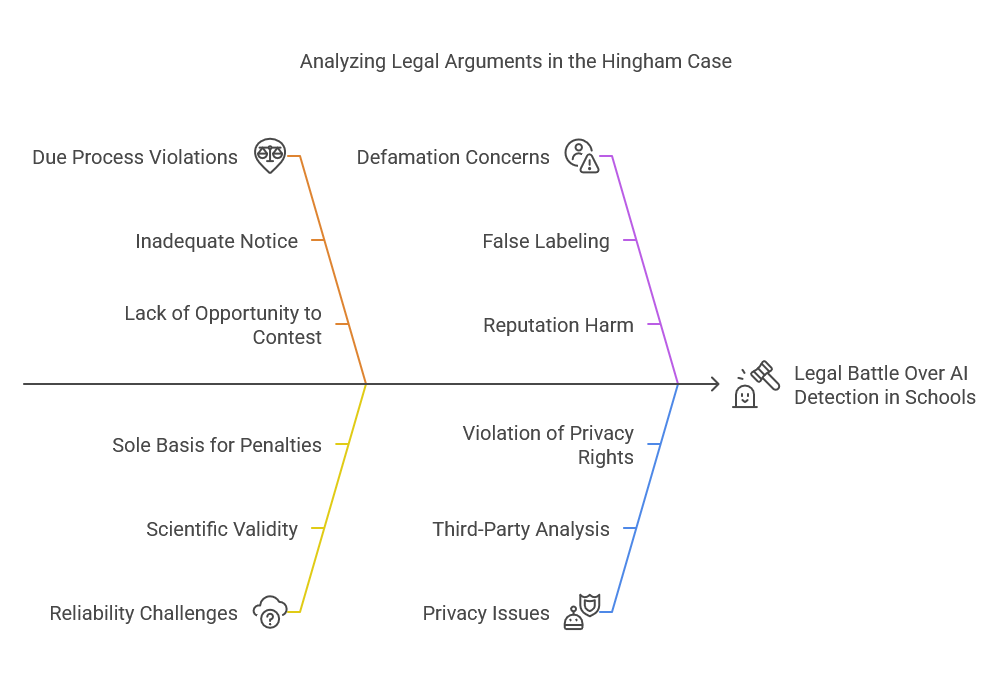

The legal battle unfolding in Massachusetts courtrooms centers on several key arguments that could have far-reaching implications.

The student plaintiff’s legal team has built their case on several foundational claims:

- Due process violations: They argue that the school failed to provide adequate notice about the use of AI detection tools and didn’t give students a meaningful opportunity to contest the results.

- Reliability challenges: A central argument challenges the scientific validity of AI detection tools, arguing they’re not reliable enough to serve as the sole basis for academic penalties.

- Defamation concerns: The lawsuit suggests that falsely labeling a student as a cheater based on flawed technology constitutes defamation and can cause significant harm to their reputation.

- Privacy issues: Questions have been raised about whether analyzing student work through third-party AI detection tools violates student privacy rights under educational privacy laws.

On the other side, the school district’s defense has centered around:

- Clear policy communication: They maintain that their academic integrity policies clearly prohibited unauthorized AI use and that students were adequately informed.

- Teacher discretion: The district argues that the AI tool was just one factor in identifying potential violations, and teacher judgment played a significant role.

- Educational necessity: They contend that schools must have tools to maintain academic integrity in an era when AI writing tools are widely accessible.

According to Hinckley Allen, a law firm analyzing the case, “This lawsuit underscores the critical importance of having well-documented AI policies in educational institutions. Schools implementing AI detection tools should carefully consider how these technologies are integrated into their academic integrity frameworks.”

The case raises interesting questions about evidence standards in academic settings. Unlike criminal proceedings with their “beyond a reasonable doubt” standard, schools typically operate on a “preponderance of the evidence” basis. But is an AI detection tool’s determination sufficient to meet even this lower standard?
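One way to think about that question is with a little probability. The sketch below applies Bayes’ rule to estimate how likely it is that a flagged essay was really AI-written. Every input is a hypothetical assumption chosen for illustration (the share of essays written with AI, the detector’s catch rate, and its false positive rate), and treating “preponderance” as “more likely than not” is a simplification, not a legal definition.

```python
def probability_ai_given_flag(
    base_rate: float,
    true_positive_rate: float,
    false_positive_rate: float,
) -> float:
    """Bayes' rule: P(essay is AI-written | detector flagged it)."""
    flagged_and_ai = base_rate * true_positive_rate
    flagged_and_human = (1.0 - base_rate) * false_positive_rate
    total_flagged = flagged_and_ai + flagged_and_human
    if total_flagged == 0:
        return 0.0  # the detector never flags anything under these inputs
    return flagged_and_ai / total_flagged


# Hypothetical inputs: 10% of essays are AI-written and the detector
# catches 90% of those. Only the false positive rate changes.
for fpr in (0.10, 0.40):
    posterior = probability_ai_given_flag(
        base_rate=0.10, true_positive_rate=0.90, false_positive_rate=fpr
    )
    verdict = "more likely than not" if posterior > 0.5 else "not more likely than not"
    print(f"False positive rate {fpr:.0%}: P(AI | flagged) = {posterior:.0%} ({verdict})")
```

Under these made-up inputs, a flag alone leaves the odds at about 50/50 with a 10% false positive rate and drops to about 20% with a 40% rate. That is why corroborating evidence matters so much.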

Several education law experts have suggested that this case might establish new guidelines for how schools must document and defend technology-based academic integrity determinations.

Broader Implications for Educational Policies

This lawsuit isn’t happening in isolation – it’s part of a much larger conversation about AI in education that’s forcing schools nationwide to rethink their policies.

I’ve noticed that many schools are scrambling to update their academic integrity guidelines, often without fully understanding the technology they’re trying to regulate. This reactive approach can create inconsistent and problematic policies.

Some school districts have taken a hardline approach, banning all AI use and implementing aggressive detection measures. Others are moving toward more nuanced policies that acknowledge AI’s growing role in the workplace and focus on teaching appropriate use rather than prohibition.

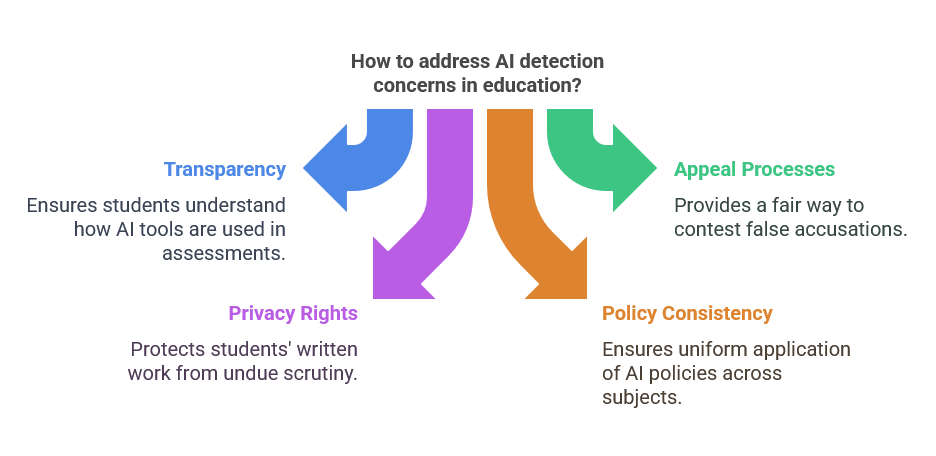

The smarter schools are developing comprehensive AI policies that:

- Clearly define what constitutes appropriate and inappropriate AI use

- Establish fair processes for investigating suspected violations

- Require multiple forms of evidence beyond just AI detection tools

- Provide appeal mechanisms for students who believe they’ve been falsely accused

- Include education components that teach students about AI literacy

A particularly thoughtful approach comes from districts that are involving students, parents, and teachers in policy development, creating buy-in from all stakeholders.

The Hingham case highlights the importance of transparency in these policies. Schools using AI detection tools should be clear about:

- Which specific tools they’re using

- How these tools factor into academic integrity decisions

- The known limitations and error rates of these technologies

- How students can contest determinations they believe are incorrect

Education policy experts suggest that schools should document their decision-making processes when implementing AI-related policies. This documentation could prove crucial if legal challenges arise.

As one education technology administrator I spoke with put it: “The lesson from Hingham isn’t that schools shouldn’t use AI detection – it’s that they need to use it responsibly, with human oversight, and with fair processes for students to appeal.”

Student Perspectives and Rights

Let’s take a moment to consider this from the students’ perspective. Imagine pouring hours into an essay, crafting what you believe is your best work, only to be told by an algorithm that you couldn’t possibly have written it yourself.

For the students at Hingham High School, this wasn’t a hypothetical scenario – it was their reality. And the psychological impact of being labeled a cheater shouldn’t be underestimated.

“There’s this profound sense of helplessness when you’re accused by a machine,” explained one education advocate I interviewed. “How do you prove a negative? How do you prove you didn’t use AI when the only evidence is an algorithm’s determination?”

Students across age groups are increasingly concerned about their rights in an educational landscape transformed by AI. Some key concerns include:

- Being falsely accused based on writing styles that happen to share characteristics with AI-generated text

- Lack of transparency about how determinations are made

- Uneven implementation of AI policies across different teachers and subjects

- Having their natural writing progress scrutinized if they improve too quickly

For college-bound students, these concerns take on added weight. Academic integrity violations can appear on transcripts and affect college applications. In competitive admissions environments, such marks can be devastating.

Student advocacy groups have begun highlighting the rights students should expect when it comes to AI detection:

- The right to know when their work is being analyzed by AI detection tools

- The right to understand how these tools factor into grading and integrity assessments

- The right to contest false positives with meaningful appeal processes

- The right to privacy regarding their written work

As one high school junior told me when discussing the case: “It’s like we’re guilty until proven innocent. And proving you didn’t use AI is nearly impossible if the school has already decided the detector is right.”

What Parents and Educators Should Know

If you’re a parent or teacher reading this, you might be wondering what you should do with this information. How can you navigate this new AI landscape responsibly?

For parents, here are some key questions to ask your child’s school:

- Does the school use AI detection tools? If so, which ones?

- What is the school’s process when AI content is suspected?

- How can students appeal if they believe they’ve been falsely accused?

- What evidence beyond AI detection is required before academic penalties are applied?

- How is the school teaching students about appropriate AI use rather than just policing it?

Parents should also have open conversations with their children about AI tools – what they are, when they’re appropriate to use, and the importance of academic integrity in the digital age.

For educators, implementing best practices around AI detection means:

- Using detection tools as just one piece of evidence, not the entire case

- Keeping up with research on detection tool accuracy and limitations

- Designing assignments that are more resistant to AI completion (personal reflections, in-class writing, project-based assessments)

- Creating classroom environments where students feel comfortable discussing AI use

- Documenting all steps in the academic integrity process

One middle school teacher I spoke with has taken a particularly innovative approach: “Instead of just trying to catch AI use, I’ve redesigned my assignments to incorporate AI tools intentionally. Students learn to use AI as a brainstorming partner or editor, but they still have to demonstrate their own thinking.”

This approach acknowledges a reality many educators are facing: AI is here to stay, and teaching students to use it ethically may be more productive than trying to ban it entirely.

Future Outlook and Potential Outcomes

So where does the Hingham case go from here, and what might it mean for the future of education?

Legal experts I’ve consulted suggest several possible outcomes:

- Settlement: The most likely scenario is that the school district settles the case, perhaps implementing policy changes without admitting fault.

- Judicial ruling: If the case proceeds to judgment, a court ruling could establish important precedents about schools’ obligations when using AI detection tools.

- Policy reforms: Regardless of the legal outcome, the publicity around the case is already pushing schools nationwide to review their AI policies.

- Technology improvements: Detection tool developers may respond by improving accuracy and providing more transparent explanations for their determinations.

Looking beyond this single case, the integration of AI in education is just beginning. We’re likely to see:

- More sophisticated AI detection tools with lower false positive rates

- Greater emphasis on designing “AI-proof” assignments that focus on in-class work and personal reflection

- Increased incorporation of AI literacy into the standard curriculum

- Evolution toward teaching appropriate AI use rather than blanket prohibitions

One thing is certain: the Hingham lawsuit marks just the beginning of what will likely be years of legal and policy development around AI in education. The tension between maintaining academic integrity and recognizing AI’s legitimate role in the workplace will continue to challenge educational institutions.

As schools develop more nuanced approaches, they’ll need to balance competing priorities: preparing students for a future where AI is commonplace while still ensuring they develop fundamental skills and genuine understanding.

Conclusion

The Hingham High School AI lawsuit stands at the intersection of education, technology, and law – a perfect storm that highlights how unprepared many schools are for the AI revolution.

As this case makes its way through the legal system, it’s sending a clear message to educational institutions everywhere: hasty implementation of AI detection without proper policies, transparency, and appeal mechanisms is legally risky and potentially harmful to students.

For students and parents, the case underscores the importance of understanding your school’s AI policies and advocating for fair practices. For educators and administrators, it’s a wake-up call to develop thoughtful approaches that balance academic integrity with student rights.

I believe the most productive path forward isn’t about choosing between embracing AI completely or trying to ban it entirely. Instead, it’s about developing ethical frameworks for its use, teaching students to understand its capabilities and limitations, and creating assessment methods that value human creativity and critical thinking.

The outcome of the Hingham lawsuit will likely influence how schools nationwide approach these challenges. But regardless of the legal result, the conversation it has started is already changing how we think about education in the age of artificial intelligence.

What do you think? Is your school handling AI policies effectively? Have you or someone you know experienced issues with AI detection tools? I’d love to hear your thoughts and experiences in the comments below.

Frequently Asked Questions

What exactly happened in the Hingham High School AI lawsuit?

A student at Hingham High School in Massachusetts was accused of using AI to write an assignment based on an AI detection tool’s determination. The student and their parents filed a lawsuit claiming the accusation was false, the detection tool was unreliable, and the school violated proper procedures in handling the situation.

Can AI detection tools wrongly accuse students of cheating?

Yes, AI detection tools are known to have false positive rates, meaning they sometimes identify human-written content as AI-generated. Research suggests these false positive rates can range from 10% to 40% depending on the tool and the type of content analyzed.

How accurate are AI content detection tools?

Current AI detection tools have significant limitations. Their accuracy varies widely depending on the specific tool, the type of content being analyzed, and the writing style. No detection tool currently available can claim 100% accuracy, and most have noteworthy error rates that make them problematic when used as the sole basis for academic penalties.

What rights do students have when accused of using AI?

Students typically have the right to be informed of allegations against them, to present their side of the story, to see the evidence being used, and to appeal decisions they believe are unfair. However, specific rights vary by school district and state. The Hingham lawsuit may help establish clearer standards for student rights in AI-related cases.

How are schools developing AI policies?

Schools are taking varied approaches to AI policy development. Some are implementing strict prohibitions on AI use with aggressive detection measures, while others are developing more nuanced policies that specify when AI use is appropriate and when it isn’t. The most effective policies tend to include clear definitions, fair investigation processes, appeal mechanisms, and educational components about ethical AI use.

What should parents know about AI tools in their children’s schools?

Parents should ask whether their children’s schools use AI detection tools, understand the processes for investigating suspected AI use, know what appeal mechanisms exist, and find out how the school is teaching responsible AI use rather than just policing it. Open family discussions about AI ethics and appropriate use are also important.

How might this lawsuit change how schools use AI detection?

This lawsuit could establish new standards for how schools implement AI detection, potentially requiring them to provide more transparency about their methods, use multiple forms of evidence beyond just detection tools, create robust appeal processes, and document their decision-making more thoroughly when investigating suspected AI use.

What are best practices for schools implementing AI policies?

Best practices include using detection tools as just one piece of evidence rather than the entire case, staying current on detection technology limitations, designing assignments that assess deeper understanding, creating open dialogue about appropriate AI use, providing clear guidelines on when AI can be used, and establishing fair appeal processes for contested determinations.